We present a real-time algorithm, SocioSense, for socially-aware navigation of a robot amongst pedestrians. Our approach computes time-varying behaviors of each pedestrian using Bayesian learning and Personality Trait theory. These psychological characteristics are used for long-term path prediction and generating proxemic characteristics for each pedestrian. We combine these psychological constraints with social constraints to perform human-aware robot navigation in low- to medium-density crowds. The estimation of time-varying behaviors and pedestrian personalities can improve the performance of long-term path prediction by 21%, as compared to prior interactive path prediction algorithms. We also demonstrate the benefits of our socially-aware navigation in simulated environments with tens of pedestrians.

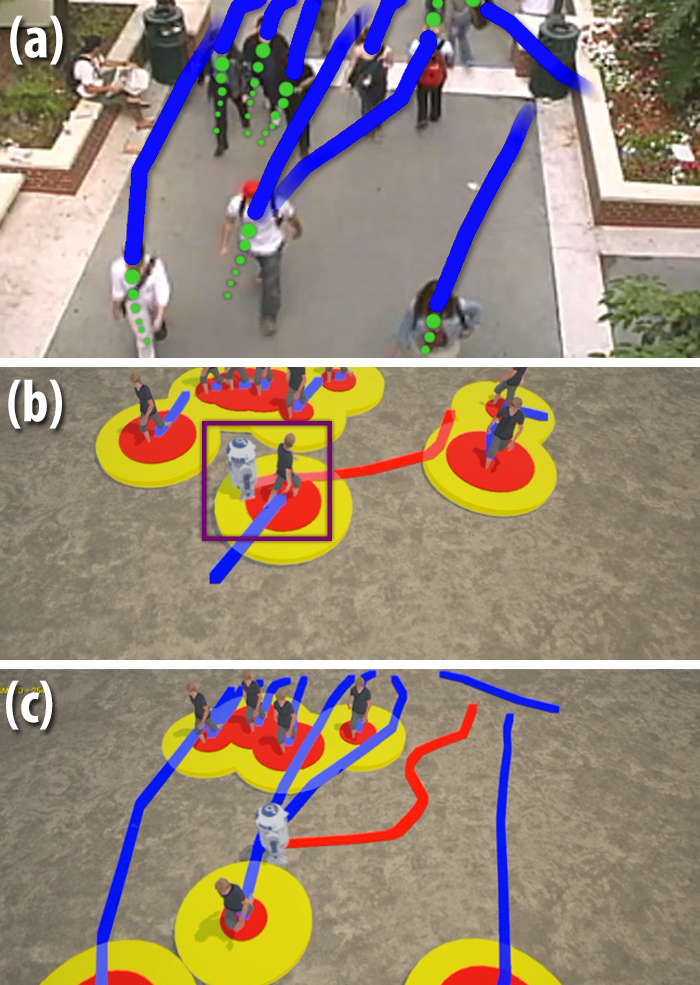

Fig: Improved Navigation using SocioSense: (a) shows a real-world crowd video and the extracted pedestrian trajectories in blue. The green markers are the predicted positions of each pedestrian computed by our algorithm that are used for collision-free navigation. The red and yellow circles around each pedestrian in (b) and (c) highlight their personal and social spaces respectively, computed using their personality traits. We highlight the benefits of our navigation algorithm that accounts for psychological and social constraints in (c) vs. an algorithm that does not account for those constraints in (b). The red trajectory of the white robot maintains these interpersonal spaces in (c), while the robot navigates close to the pedestrians in (b) and violates the social norms.
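The personal and social spaces in the caption above can be pictured as two concentric circles around each pedestrian. The following is a minimal sketch, not the SocioSense implementation: the `Pedestrian` class, the function names, and the radii are hypothetical, and pedestrians are treated as a static snapshot rather than as the predicted, moving positions the algorithm uses.

```python
import math
from dataclasses import dataclass


@dataclass
class Pedestrian:
    x: float
    y: float
    personal_radius: float  # hypothetical value in metres, not from the paper
    social_radius: float    # hypothetical value in metres, not from the paper


def classify_intrusion(robot_xy, ped):
    """Return 'personal', 'social', or 'none' for a single robot position."""
    d = math.hypot(robot_xy[0] - ped.x, robot_xy[1] - ped.y)
    if d < ped.personal_radius:
        return "personal"
    if d < ped.social_radius:
        return "social"
    return "none"


def path_violations(path, pedestrians):
    """Count waypoints that fall inside any personal or social space.

    A waypoint is counted once, under the most severe zone it enters.
    """
    counts = {"personal": 0, "social": 0}
    for point in path:
        zones = [classify_intrusion(point, p) for p in pedestrians]
        if "personal" in zones:
            counts["personal"] += 1
        elif "social" in zones:
            counts["social"] += 1
    return counts
```

Under these assumptions, a path like the one in (b) would produce non-zero counts, while a path like the one in (c) would keep both counts at zero.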

We present a real-time algorithm to automatically classify the dynamic behavior or personality of a pedestrian based on his or her movements in a crowd video. Our classification criterion is based on Personality Trait Theory. We present a statistical scheme that dynamically learns the behavior of every pedestrian in a scene and computes that pedestrian's motion model. This model is combined with global crowd characteristics to compute the movement patterns and motion dynamics, which can also be used to predict the crowd movement and behavior. We highlight its performance in identifying the personalities of different pedestrians in low- and high-density crowd videos. We also evaluate the accuracy by comparing the results with a user study.

Fig: We highlight the algorithm's application to the 2017 Presidential Inauguration crowd video from the National Mall in Washington, DC (courtesy PBS): (1) original aerial video footage of the dense crowd; (2) a synthetic rendering of pedestrians in the red square based on their personality classification: aggressive (orange), shy (black), active (blue), tense (purple), etc.; (3) a predicted simulation of 1M pedestrians in the space with a similar distribution of personality traits.
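To illustrate the idea of learning a pedestrian's behavior dynamically, here is a minimal sketch of a recursive Bayesian update over the personality labels named in the caption. It is an illustration under stated assumptions, not the authors' statistical scheme: the only observed feature is walking speed, and the per-trait speed models in `SPEED_MODELS` are invented values.

```python
import math

# Hypothetical per-trait walking-speed models (mean, std in m/s).
# These numbers are illustrative only and do not come from the paper.
SPEED_MODELS = {
    "aggressive": (1.8, 0.3),
    "active": (1.5, 0.3),
    "tense": (1.2, 0.3),
    "shy": (0.9, 0.3),
}


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def uniform_prior():
    """Start with equal belief in every trait."""
    n = len(SPEED_MODELS)
    return {trait: 1.0 / n for trait in SPEED_MODELS}


def update_belief(belief, observed_speed):
    """One Bayes step: posterior is proportional to prior times likelihood."""
    unnormalized = {
        trait: belief[trait] * gaussian_pdf(observed_speed, *SPEED_MODELS[trait])
        for trait in belief
    }
    total = sum(unnormalized.values())
    return {trait: p / total for trait, p in unnormalized.items()}
```

Calling `update_belief` once per video frame, starting from `uniform_prior()`, lets the belief shift as new observations arrive, so the most probable label for a pedestrian can change over time.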

This project was funded by Intel, The Boeing Company, and the National Science Foundation.

If you would like to use the dataset, please contact the authors (Aniket Bera and Dinesh Manocha) at ab@cs.unc.edu. To see more work on motion and crowd simulation models in our GAMMA group, visit http://gamma-web.iacs.umd.edu/research/crowds/