ISNN: Impact Sound Neural Network for Audio-Visual Object Classification

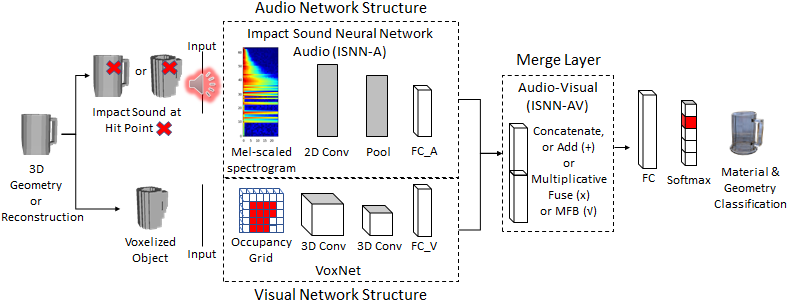

Our Impact Sound Neural Network - Audio (ISNN-A) takes as input a spectrogram of the sound produced when a real or synthetic object is struck. Our audio-visual network (ISNN-AV) combines ISNN-A with VoxNet to classify object geometry and material.
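As a minimal sketch of the kind of input ISNN-A consumes, the Python below synthesizes an impact sound and converts it to a log-magnitude spectrogram. Both steps rest on assumptions not stated on this page. The synthetic sound uses modal synthesis (a sum of exponentially damped sinusoids, a standard technique for impact sounds but not necessarily the one used to build the datasets). The frame length, hop size, Hann window, and log scaling are illustrative choices rather than the network's actual preprocessing. The helpers `synth_impact`, `fft`, and `log_spectrogram` are hypothetical.

```python
import cmath
import math


def synth_impact(modes, sample_rate=8000, duration=0.25):
    """Hypothetical modal-synthesis sketch: an impact sound as a sum of
    exponentially damped sinusoids, one per (frequency_hz, damping, amplitude)."""
    n = int(sample_rate * duration)
    out = []
    for i in range(n):
        t = i / sample_rate
        out.append(sum(a * math.exp(-d * t) * math.sin(2 * math.pi * f * t)
                       for f, d, a in modes))
    return out


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddled = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddled
        out[k + n // 2] = even[k] - twiddled
    return out


def log_spectrogram(signal, frame_len=256, hop=128, eps=1e-10):
    """Short-time Fourier transform with a (periodic) Hann window.

    Returns a list of frames, each holding frame_len // 2 + 1 log-magnitude
    bins (DC up to the Nyquist frequency).
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_len)
              for i in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        segment = [signal[start + i] * window[i] for i in range(frame_len)]
        spectrum = fft(segment)[: frame_len // 2 + 1]
        frames.append([math.log(abs(c) + eps) for c in spectrum])
    return frames


if __name__ == "__main__":
    sr = 8000
    # Two hypothetical modes: 440 Hz (slow decay) and 1250 Hz (fast decay).
    sound = synth_impact([(440.0, 8.0, 1.0), (1250.0, 20.0, 0.5)], sample_rate=sr)
    spec = log_spectrogram(sound)
    peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
    # Frequency of the strongest bin in the first frame (bin width sr / 256 Hz).
    print(peak_bin * sr / 256)
```

Log magnitudes compress the large dynamic range of a decaying impact, which is one common reason spectrogram-based audio classifiers use them.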

ABSTRACT

3D object geometry reconstruction remains a challenge when working with transparent, occluded, or highly reflective surfaces. While recent methods classify shape features using raw audio, we present a multimodal neural network optimized for estimating an object's geometry and material. Our networks use spectrograms of recorded and synthesized object impact sounds and voxelized shape estimates to extend the capabilities of vision-based reconstruction. We evaluate our method on multiple datasets of both recorded and synthesized sounds. We further present an interactive application for real-time scene reconstruction in which a user can strike objects, and the resulting sound is used to instantly classify and segment the struck object, even if it is transparent or visually occluded.
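The abstract pairs spectrograms with voxelized shape estimates. VoxNet consumes a 32 × 32 × 32 occupancy grid, and the sketch below shows one simple way to turn a point cloud (for example, points from a depth sensor or a reconstructed mesh) into such a grid. The uniform scaling, the 32³ default, and the `voxelize` helper are assumptions for illustration; the pipeline used for ISNN-AV may differ.

```python
def voxelize(points, resolution=32):
    """Hypothetical helper: map (x, y, z) points into a binary occupancy grid.

    The bounding box is scaled uniformly (preserving aspect ratio) so that its
    longest side spans `resolution` voxels; grid[i][j][k] == 1 marks a voxel
    containing at least one point.
    """
    xs, ys, zs = zip(*points)
    mins = (min(xs), min(ys), min(zs))
    # Longest bounding-box side; fall back to 1.0 when all points coincide.
    extent = max(max(xs) - mins[0], max(ys) - mins[1], max(zs) - mins[2]) or 1.0
    grid = [[[0] * resolution for _ in range(resolution)]
            for _ in range(resolution)]
    for p in points:
        # Clamp so points on the far face of the box land in the last voxel.
        i, j, k = (min(int((c - m) / extent * resolution), resolution - 1)
                   for c, m in zip(p, mins))
        grid[i][j][k] = 1
    return grid


if __name__ == "__main__":
    cube_corners = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
    grid = voxelize(cube_corners)
    print(sum(v for plane in grid for row in plane for v in row))  # 8 occupied voxels
```

Scaling by the longest side keeps a tall, thin object tall and thin in the grid, at the cost of leaving some voxels unused along the shorter axes.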

PUBLICATION

ISNN: Impact Sound Neural Network for Audio-Visual Object Classification

European Conference on Computer Vision (ECCV) 2018

Auston Sterling, Justin Wilson, Sam Lowe, and Ming C. Lin

@InProceedings{Sterling_2018_ECCV,
  author = {Sterling, Auston and Wilson, Justin and Lowe, Sam and Lin, Ming C.},
  title = {ISNN: Impact Sound Neural Network for Audio-Visual Object Classification},
  booktitle = {The European Conference on Computer Vision (ECCV)},
  month = {September},
  year = {2018}
}

Preprint PDF

Preprint Supplemental Material


DATASETS

RSAudio Sounds (.wav) and Geometries (.mat, .ply, .x3d)

ModelNet10 and ModelNet40 Sounds (.wav) and Geometries (.ply)
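The dataset sounds are distributed as .wav files. A minimal sketch for loading one with Python's standard `wave` module follows. It assumes 16-bit PCM samples and keeps only the first channel; neither detail is stated here, so check the files' actual format before relying on it. `load_wav` is a hypothetical helper, not part of the released datasets.

```python
import array
import sys
import wave


def load_wav(path):
    """Hypothetical helper: read a 16-bit PCM .wav file and return
    (sample_rate, samples), keeping the first channel as floats in [-1, 1)."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = wf.getnchannels()
        rate = wf.getframerate()
        raw = wf.readframes(wf.getnframes())
    data = array.array("h")
    data.frombytes(raw)
    if sys.byteorder == "big":
        data.byteswap()  # WAV stores samples little-endian
    # Frames are interleaved across channels; take every `channels`-th sample.
    return rate, [s / 32768.0 for s in data[::channels]]
```

For example, `rate, samples = load_wav(path)` yields a sample rate and a list of floats that a spectrogram routine can consume directly.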