Effects of virtual acoustics on target-word identification performance in multi-talker environments

Atul Rungta

Nicholas Rewkowski

Carl Schissler

Philip Robinson

Ravish Mehra

Dinesh Manocha

University of North Carolina at Chapel Hill, Oculus Research, and University of Maryland at College Park

Abstract

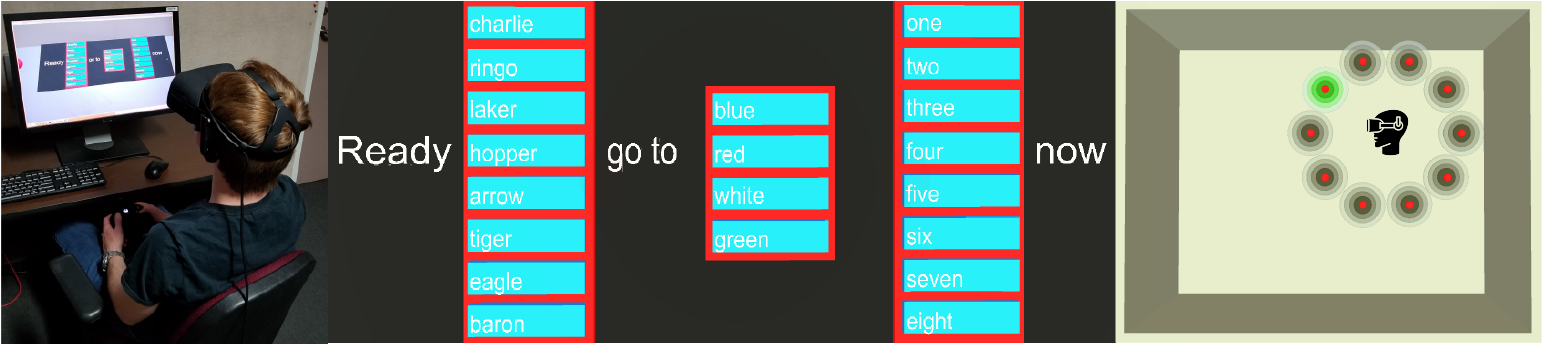

Many virtual reality applications let multiple users communicate in a multi-talker environment, recreating the classic cocktail-party effect. While there is a vast body of research on the perception and intelligibility of human speech in real-world scenarios with cocktail-party effects, there is little work on accurately modeling and evaluating the effect in virtual environments. To evaluate the impact of virtual acoustic simulation on the cocktail-party effect, we conducted experiments to establish the signal-to-noise ratio (SNR) thresholds for target-word identification performance. Our evaluation used sentences from the coordinate response measure corpus in the presence of multi-talker babble. The thresholds were established under varying sound propagation and spatialization conditions. We used a state-of-the-art geometric acoustic system integrated into the Unity game engine to simulate varying conditions of reverberance (direct sound only; direct sound and early reflections; direct sound, early reflections, and late reverberation) and spatialization (mono, stereo, and binaural). Our results show that spatialization has the biggest effect on listeners' ability to discern the target words in multi-talker virtual environments. Reverberance, on the other hand, has a slight negative effect on this ability.
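For readers unfamiliar with SNR-based stimuli, the following is a minimal sketch of how a target sentence could be mixed with babble at a chosen SNR, using the common RMS-based definition SNR(dB) = 20 log10(RMS_target / RMS_masker). It is not the authors' pipeline, which relied on a geometric acoustic system in Unity; the function names `rms` and `mix_at_snr` and the synthetic signals are hypothetical and serve only as illustration.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of audio samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def mix_at_snr(target, babble, snr_db):
    """Scale the babble so the target-to-babble RMS ratio equals snr_db,
    then add it to the target. Returns (mixture, scaled_babble).

    Assumes both signals have the same length and non-zero RMS.
    """
    gain = rms(target) / (rms(babble) * 10 ** (snr_db / 20))
    scaled = [gain * b for b in babble]
    mixture = [t + s for t, s in zip(target, scaled)]
    return mixture, scaled


# Synthetic stand-ins for a target sentence and multi-talker babble.
target = [math.sin(0.10 * i) for i in range(1000)]
babble = [math.sin(0.37 * i) + 0.5 * math.cos(0.05 * i) for i in range(1000)]

mixture, scaled = mix_at_snr(target, babble, snr_db=-6.0)
achieved = 20 * math.log10(rms(target) / rms(scaled))
print(f"achieved SNR: {achieved:.2f} dB")
```

In a threshold experiment, `snr_db` would be varied across trials until the listener's identification performance reaches the criterion level; the adaptive procedure itself is not shown here.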

Atul Rungta, Nicholas Rewkowski, Carl Schissler, Philip Robinson, Ravish Mehra, and Dinesh Manocha. Effects of virtual acoustics on target-word identification performance in multi-talker environments.

Preprint (PDF, 1.4 MB), ACM Symposium on Applied Perception 2018