Interactive Sound Propagation and Rendering for Large Multi-Source Scenes

Abstract

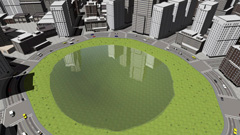

We present an approach to generate plausible acoustic effects at interactive rates in large dynamic environments containing many sound sources. Our formulation combines listener-based backward ray tracing with sound source clustering and hybrid audio rendering to handle complex scenes. We introduce a new algorithm for dynamic late reverberation that performs high-order ray tracing from the listener against spherical sound sources. We achieve sub-linear scaling with the number of sources by clustering distant sound sources and taking relative visibility into account. We also describe a hybrid convolution-based audio rendering technique that can process hundreds of thousands of sound paths at interactive rates. We demonstrate the performance of our approach on many indoor and outdoor scenes with up to 200 sound sources. In practice, our algorithm can compute over 50 reflection orders at interactive rates on a multi-core PC, and we observe a 5x speedup over prior geometric sound propagation algorithms.
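To give a rough intuition for why clustering distant sources can reduce the per-source cost, the following is a minimal sketch, not the method from the paper. It greedily merges sources whose separation, as seen from the listener, subtends a small angle. The function name `cluster_sources`, the `max_angle` threshold, and the greedy centroid strategy are all assumptions made for illustration, and the sketch ignores the relative visibility test mentioned above.

```python
import math

def cluster_sources(listener, sources, max_angle=0.1):
    """Greedily group 3D source positions that appear close together
    from the listener's position.

    Hypothetical illustration only: a source joins a cluster when its
    distance to the cluster centroid, divided by the centroid's distance
    to the listener, is below max_angle (a small-angle approximation of
    the subtended angle in radians). Visibility is NOT considered.
    """
    clusters = []  # each cluster is a list of (x, y, z) positions
    for s in sources:
        for c in clusters:
            centroid = tuple(sum(p[i] for p in c) / len(c) for i in range(3))
            dist_to_listener = math.dist(listener, centroid)
            separation = math.dist(s, centroid)
            if dist_to_listener > 0 and separation / dist_to_listener < max_angle:
                c.append(s)
                break
        else:
            # No existing cluster was close enough in angular terms.
            clusters.append([s])
    return clusters

listener = (0.0, 0.0, 0.0)
sources = [(100.0, 0.0, 0.0), (101.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
clusters = cluster_sources(listener, sources)
print([len(c) for c in clusters])
```

In this toy setup the two far-away sources end up in one cluster while the two nearby sources stay separate, so propagation would only need to be computed for three entities instead of four. The effect grows as more sources lie far from the listener.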

Publication

Video

Download .mp4 (180 MB)