Feature-Guided Dynamic Texture Synthesis on Continuous Flows

Abstract

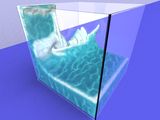

We present a technique for synthesizing spatially and temporally varying textures on continuous flows using image or video input, guided by the physical characteristics of the fluid stream itself. This approach enables the generation of realistic textures on the fluid that correspond to the local flow behavior, creating the appearance of complex surface effects, such as foam and small bubbles. Our technique requires only a simple specification of texture behavior, and automatically generates and tracks the features and texture over time in a temporally coherent manner. Based on this framework, we also introduce a technique to perform feature-guided video synthesis. We demonstrate our algorithm on several simulated and recorded natural phenomena, including river streams and lava flows. We also show how our methodology can be extended beyond realistic appearance synthesis to more general scenarios, such as temperature-guided synthesis of complex surface phenomena over a liquid during boiling.

Publication

Rahul Narain, Vivek Kwatra, Huai-Ping Lee, Theodore Kim, Mark Carlson, and Ming Lin. “Feature-Guided Dynamic Texture Synthesis on Continuous Flows.” Rendering Techniques 2007 (Proc. Eurographics Symposium on Rendering), pp. 361-370, 2007.

- Full paper (PDF, 2.0 MB)
- Color Plate (PDF, 0.6 MB)
- Video (DivX/XviD, 54 MB)